How To Get Rid Of Crawl Errors And 404 Not Found Errors In Google Webmaster Tools Reports

As a blogger or the webmaster of a website, you should take fixing broken links on your site seriously. Lots of broken links, bad redirects and nonexistent (404) pages send a poor signal to Googlebot and can sometimes harm your site’s reputation in the eyes of a search engine.

It’s very important to fix the broken links and bad redirects on your site for two reasons.

First, visitors who click a referring link on an external website are likely to close the browser window as soon as they land on a 404 page on your site. Second, broken links waste “Google juice”: when Googlebot follows a broken link or a bad redirect, it cannot reach the intended inner page of your site, so the value of that link is lost.

I take my Google Webmaster Tools crawl reports very seriously and try to fix broken links as soon as I find them. If you are a newbie and need help fixing crawl errors in Google Webmaster Tools, here are a few things you should know and practice.

How To Find The Crawl Reports In Google Webmaster Tools

First, you need to verify your site by uploading a verification file to the root directory of your domain. If your blog is on Blogger or another free blog provider, you can add a unique verification meta tag instead.

I assume you have verified your site and submitted an XML sitemap in Google Webmaster Tools. If you haven’t, create an XML sitemap and submit the sitemap URL first.
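If your platform does not generate a sitemap for you, keep in mind that a sitemap is just an XML file in the format defined by the sitemaps.org protocol. Here is a minimal Python sketch that writes one. The URLs are hypothetical placeholders, and `build_sitemap` is an illustrative helper, not part of Google Webmaster Tools or any plugin. List only URLs that actually exist, because a sitemap entry that returns a 404 is reported as a crawl error.

```python
import xml.etree.ElementTree as ET

# XML namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemap: one <url><loc>...</loc></url> per URL."""
    ET.register_namespace("", SITEMAP_NS)  # serialize as the default xmlns
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for url in urls:
        entry = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(entry, f"{{{SITEMAP_NS}}}loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical URLs: list only pages that really exist on your site.
    print(build_sitemap([
        "https://www.domain.com/",
        "https://www.domain.com/abcd.html",
    ]))
```

ElementTree escapes characters such as & in URLs automatically, which the sitemap format requires.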

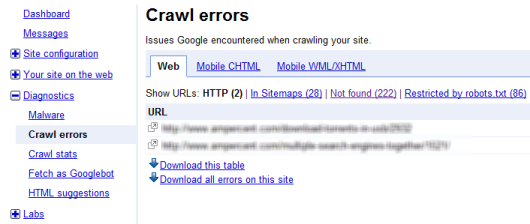

1. Log in to your Google Webmaster Tools account and go to the Crawl errors report page, as shown in the screenshot above.

There are three types of crawl errors listed in the Google Webmaster Tools crawl reports:

- Crawl errors received through Sitemaps

- Crawl errors encountered while following a link that returns a 404 Not Found page (on your blog or on an external website)

- URLs restricted by robots.txt

Understanding Robots.txt, Noindex And Nofollow For Webpages

First, you need to understand how Google and other search engines crawl webpages and links, and how to tweak your site so that search bots run into as few 404 Not Found errors as possible while they crawl deep into your website.

If you do not fully understand how web crawling works, fixing the errors won’t help, because errors that are not fixed the right way will simply come back.

Here are some conceptual points worth noting:

1. Google and other search bots crawl webpages through links. So if an external website is linking to one of the internal pages of your site, the target page will be crawled and indexed.

2. If an external website links to one of your pages with the “rel=nofollow” attribute, the page can still be crawled. When page A links to page B with the rel=nofollow attribute, page A is telling search engines: “Hey, I do not want to pass PageRank or endorse this site to my readers.”

That does not mean Google won’t “find” or “crawl” a page just because the incoming link is “rel=nofollow”. The page simply won’t receive PR juice from the external website, which could otherwise help it rank for a given phrase or keyword.

3. The best way to prevent search engines from crawling or indexing a webpage is to use a robots.txt file or to password-protect the page. The former is much smoother and is the recommended option.

4. You can also use the “noindex” robots meta tag in the head section of a webpage. Using the “noindex,follow” meta tag tells search engines:

“I don’t want this particular page to be indexed and shown in search results. But if you are a bot and arrived at this page through a text link, please crawl all the links on this page and let PageRank flow through them.”

5. Using the “noindex,nofollow” meta tag tells search engines:

“I don’t want this particular page to be indexed and shown in search results, nor do I want bots to follow the links on this page. It’s a dead end, please go back.”
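To see how points 3 to 5 fit together, here is a minimal Python sketch, assuming a hypothetical robots.txt that blocks a /private/ section. `can_crawl` uses the standard library’s `urllib.robotparser` to answer the crawl question, and `robots_meta` reads a page’s robots meta tag to answer the index and follow questions. The helper names and URLs are made up for illustration.

```python
import urllib.robotparser
from html.parser import HTMLParser

# Hypothetical robots.txt that keeps every bot out of a /private/ section.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def can_crawl(url, robots_txt=ROBOTS_TXT, agent="Googlebot"):
    """True if the robots.txt rules allow `agent` to fetch `url` (crawling only)."""
    rules = urllib.robotparser.RobotFileParser()
    rules.parse(robots_txt.splitlines())
    rules.modified()  # mark the rules as loaded so can_fetch() uses them
    return rules.can_fetch(agent, url)


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            for directive in (attrs.get("content") or "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_meta(html):
    """Report whether a page may be indexed and whether its links may be followed."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return {
        "index": "noindex" not in parser.directives,    # no tag means "index"
        "follow": "nofollow" not in parser.directives,  # no tag means "follow"
    }
```

Note the split: robots.txt decides whether a bot may fetch the page at all, while the meta tag can only be read by a bot that was allowed to fetch the page.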

The Correct Way to Remove Crawl Errors

There is no hard and fast rule; methods vary from one webmaster to another.

Step 1. Find The Sources Which Are Causing 404 Not Found Errors

The first step is to find the source of the “Not found” links.

Is it an external site linking to a nonexistent page on your site?

Is it one of the internal pages of your own domain?

Was the link found on a search results page?

There are many possibilities.
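For links on pages you control, part of this search can be automated. The sketch below assumes you already have the HTML of your pages in a dictionary (for example, from a local export or crawl); `pages_linking_to`, `LinkCollector` and the example URLs are hypothetical. It resolves relative links the way a browser would and reports which pages link to a given broken URL.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkCollector(HTMLParser):
    """Collects every <a href> on a page as an absolute URL without #fragment."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                absolute = urljoin(self.page_url, href)  # resolve relative links
                self.links.add(urldefrag(absolute)[0])   # drop "#comments" etc.


def pages_linking_to(pages, broken_url):
    """Given {page_url: html}, return the sorted page URLs that link to broken_url."""
    sources = []
    for page_url, html in pages.items():
        collector = LinkCollector(page_url)
        collector.feed(html)
        if broken_url in collector.links:
            sources.append(page_url)
    return sorted(sources)
```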

Here are some examples:

Case 1: When an external site is linking to a nonexistent 404 page of your site

You cannot stop someone else from linking to a wrong URL on your site.

You could contact the webmaster of that site, but this is not a real solution, because in some cases you can’t convince the site admin to point to the correct link. Think of social media and forum links: you can’t reach out to hundreds or thousands of sources every single day.

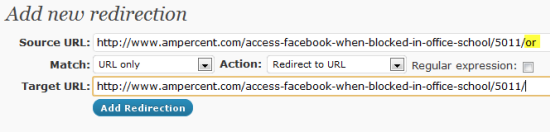

Solution: Install the Redirection plugin for WordPress and 301 redirect the bad link to the correct link yourself.

Then, when bots crawl the broken link on the external site, they will not get a 404 error and will land on the correct page instead. This technique has two advantages.

First, human readers who spot your link on an external site arrive at the right page of your site instead of the 404 page. Second, bots will successfully crawl the external link, which passes valuable Google juice to the intended page of your site.
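The Redirection plugin does this inside WordPress. On other platforms the idea is the same: answer the bad URL with a 301 status and a Location header pointing at the correct page. Here is a minimal Python WSGI sketch of that idea; it is not how the Redirection plugin works internally, and the paths in `REDIRECTS` and `PAGES` are hypothetical.

```python
# Hypothetical map of bad incoming paths -> correct paths on your own site.
REDIRECTS = {
    "/abcd.htm": "/abcd.html",
    "/crawl-erors.html": "/crawl-errors.html",
}

# Hypothetical set of pages that actually exist.
PAGES = {"/", "/abcd.html", "/crawl-errors.html"}


def site(environ, start_response):
    """Stand-in for the real site: 200 for known pages, 404 otherwise."""
    if environ.get("PATH_INFO", "/") in PAGES:
        start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
        return [b"<h1>Page</h1>"]
    start_response("404 Not Found", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Not found"]


def redirect_middleware(app, redirects):
    """Wrap a WSGI app so known bad paths get a 301 to the correct path."""
    def wrapped(environ, start_response):
        target = redirects.get(environ.get("PATH_INFO", "/"))
        if target is not None:
            # 301 = moved permanently: browsers and crawlers should use `target`.
            start_response("301 Moved Permanently",
                           [("Location", target), ("Content-Length", "0")])
            return [b""]
        return app(environ, start_response)
    return wrapped


application = redirect_middleware(site, REDIRECTS)
```

To try it locally, you could pass `application` to `wsgiref.simple_server.make_server("127.0.0.1", 8000, application)`, call `serve_forever()` on the result and request /abcd.htm in a browser.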

Case 2: When an internal page is linking to a nonexistent 404 page of your site

If one of the internal pages of your site links to a nonexistent 404 page, you can change the link so that it points to the correct page. There are two ways to do this:

1. Open the source page in question and edit that link.

2. 301 redirect the bad link to the correct link.

Solution: I prefer the first option, but in some situations you might want to use a redirect instead, for example after a permalink migration or after removing the category or tag base.
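For migrations like these, one rule usually covers many URLs at once. Below is a Python sketch of pattern-based redirect rules for the examples above. The URL structures are assumptions (a /category/ and /tag/ base, date-based .html permalinks), so adjust the patterns to your own site. `new_location` only computes the target path; you would still serve it with a 301, as in Case 1.

```python
import re

# Hypothetical rules for an old URL structure: (old-path pattern, new-path template).
PATTERN_REDIRECTS = [
    # Category base removed: /category/seo/ -> /seo/
    (re.compile(r"/category/(?P<slug>[^/]+)/?"), r"/\g<slug>/"),
    # Tag base removed: /tag/google/ -> /google/
    (re.compile(r"/tag/(?P<slug>[^/]+)/?"), r"/\g<slug>/"),
    # Permalink migration: /2011/05/crawl-errors.html -> /crawl-errors/
    (re.compile(r"/\d{4}/\d{2}/(?P<slug>[^/]+)\.html"), r"/\g<slug>/"),
]


def new_location(path):
    """Return the new path for an old one, or None if no rule applies."""
    for pattern, template in PATTERN_REDIRECTS:
        match = pattern.fullmatch(path)  # the whole path must match the rule
        if match:
            return match.expand(template)
    return None
```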

Case 3: When the Target Page No Longer Exists

What if the target page has been deleted and no longer exists?

Suppose you wrote a blog post at www.domain.com/abcd.html that earned 10 backlinks. A few days later, you deleted that page for whatever reason. Since external sites still point to that URL, it will show up as a 404 crawl error in Google Webmaster Tools. You can’t redirect the bad link to the correct page, because the target page has been deleted.

Solution: Use the robots.txt file to disallow that URL for all search bots. Alternatively, create a placeholder page (e.g. domain.com/no-longer-exists.html) and redirect the old link to that page. Remember that the new page (e.g. domain.com/no-longer-exists.html) must not return a 404 error.
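Before relying on the placeholder page, it is worth checking that every redirect really ends on a live page, not on another 404 or in a loop. Here is a minimal sketch, assuming you keep your redirects as a simple source-to-target map and know which paths exist; the paths and the helpers `check_redirect` and `bad_redirects` are hypothetical.

```python
def check_redirect(path, redirects, live_pages, max_hops=10):
    """Follow `redirects` (old path -> new path) starting from `path`.

    Returns (result, final_path): "ok" if the chain ends on a live page,
    "404" if it ends on a missing page, or "loop" if a path repeats or
    the chain needs more than max_hops redirects.
    """
    seen = set()
    current = path
    while current in redirects:
        if current in seen or len(seen) >= max_hops:
            return "loop", current
        seen.add(current)
        current = redirects[current]
    return ("ok" if current in live_pages else "404"), current


def bad_redirects(redirects, live_pages):
    """Return {source: (result, final_path)} for redirects not ending on a live page."""
    problems = {}
    for source in redirects:
        result = check_redirect(source, redirects, live_pages)
        if result[0] != "ok":
            problems[source] = result
    return problems
```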

Step 2: Fixing Broken Links From Spam Comments

As your blog grows and becomes more popular, spammers and impostors will try to profit by posting fake comments such as “Hi great site. I am subscribing”.

As a rule, I simply delete such spam comments whenever I find someone trying to post HTML links through the comment form that have no relation to the article or discussion. Remember that search bots can crawl links in comments and other user-submitted form fields.

But there is a more severe problem.

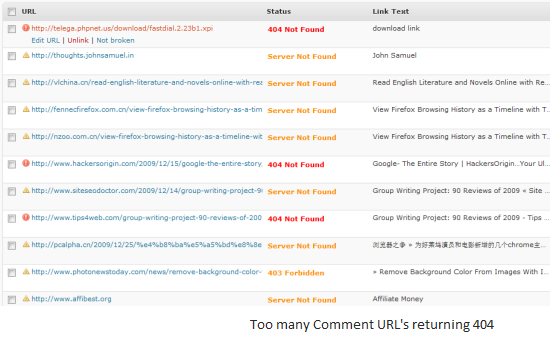

Let’s say Mr X posted a genuine comment two years ago. While posting it, Mr X entered his website’s URL in the URL field of your site’s comment form. So far so good, but two years later Mr X’s website was deleted for whatever reason, and it now returns a “Server not found” message.

Result: When bots crawl the website link in the comments of your old blog post, it will show up as a “Not found” error.

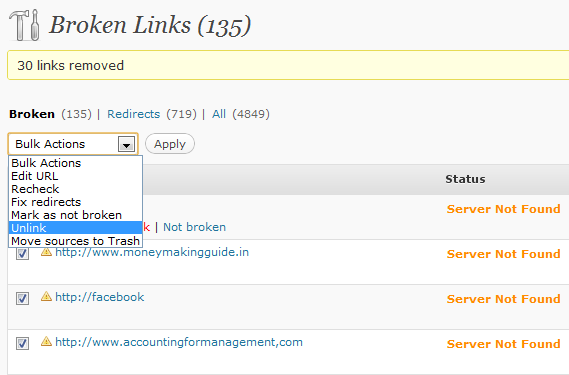

Solution: Install the Broken Link Checker WordPress plugin and scan your entire website for broken links. The scan includes links in comments, so you can check comment links in bulk and remove all the “Server not found” links in one go.
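Under the hood, a broken-link checker requests each URL and looks at the result. The Python sketch below is a rough stand-in using only the standard library, not the Broken Link Checker plugin’s code; `check_link`, `broken_links` and the status labels are made up for this example. It treats 404 and 410 as “not found” and connection failures (such as a deleted domain) as “unreachable”. Some servers reject scripted requests, so treat the results as hints to verify by hand.

```python
import urllib.error
import urllib.request


def check_link(url, timeout=10.0):
    """Roughly classify one link: "ok", "not found", "http <code>" or "unreachable"."""
    request = urllib.request.Request(url, headers={"User-Agent": "link-check-sketch/0.1"})
    try:
        # urlopen follows redirects, so "ok" means the final target answered 2xx.
        with urllib.request.urlopen(request, timeout=timeout):
            return "ok"
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status.
        return "not found" if err.code in (404, 410) else f"http {err.code}"
    except OSError:
        # URLError (DNS failure, connection refused) and timeouts are OSError
        # subclasses: this is the "Server not found" case from the example above.
        return "unreachable"


def broken_links(urls, timeout=10.0):
    """Return {url: status} for every link that is not "ok"."""
    results = {}
    for url in urls:
        status = check_link(url, timeout)
        if status != "ok":
            results[url] = status
    return results
```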

Do not delete all those comments.

Instead, select all the comments or trackbacks whose links return a 404 Not Found or “Server not found” error and choose “Unlink”. Done!
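If you manage comments outside WordPress, “Unlink” boils down to replacing a link with its plain anchor text. The sketch below does this with a simple regular expression that assumes double-quoted href attributes; it is not a full HTML parser, and it only handles links inside the comment text, not the separate website field of the comment form. `unlink` and the example URLs are hypothetical.

```python
import re

# Matches <a ... href="URL" ...>text</a>; assumes double-quoted href values.
ANCHOR_RE = re.compile(r'<a\s[^>]*?href="([^"]*)"[^>]*>(.*?)</a>', re.IGNORECASE | re.DOTALL)


def unlink(comment_html, broken_urls):
    """Replace links to any URL in broken_urls with their plain text; keep other links."""
    def replace(match):
        href, text = match.group(1), match.group(2)
        return text if href in broken_urls else match.group(0)

    return ANCHOR_RE.sub(replace, comment_html)
```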

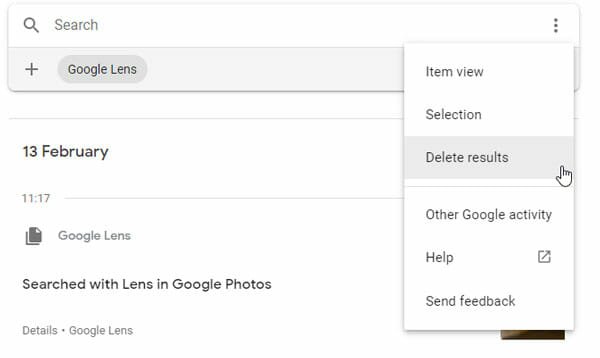

Step 3: Remove the Already Crawled URLs from Google Webmaster Tools

Once you have fixed all the broken links and bad redirects on your site, it’s time to ask Google to remove the already crawled URLs that return a 404 and update its index.

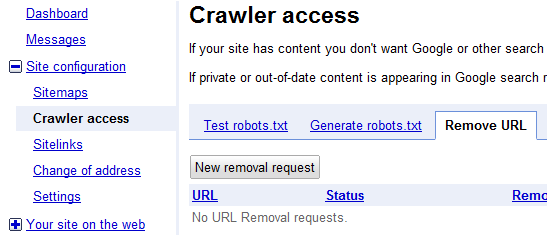

1. Go to Google Webmaster Tools and find “Remove URL(s)” under Site configuration > Crawler Access > Remove URL. Then click “New Removal request”.

2. Next, open the “Crawl errors” page under Diagnostics, copy one of the broken links and paste it into the “Remove URL” text box.

3. In the next page, select “remove from search results and cache” and click “Submit”.

Repeat this for every URL listed on the Crawl errors page of the Google Webmaster Tools crawl reports.

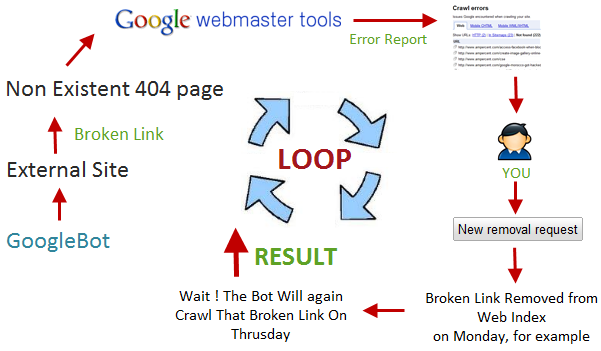

What Happens After You Submit The Remove URL Request

When you submit a new URL removal request, Google removes that URL from its web index and cache. But if Googlebot later finds a text link pointing to the broken URL, it will crawl it again and show it as an error in your Google Webmaster Central reports.

This is why you should first fix the broken links and bad redirects, and only then submit URL removal requests. Submitting a removal request alone won’t help: the bots will keep crawling the link that is broken in the first place, and you end up in an infinite loop.

In a Nutshell

To reduce crawl errors, follow these four steps:

1. First, fix all the broken links on your own pages that point to nonexistent pages of your site.

2. Next, remove the external links on your site that return a 404 or “Server not found” error.

3. If any external site links to a nonexistent page of your site, 301 redirect that bad link to the correct page.

4. Finally, remove the bad URLs via Google Webmaster Tools > Site configuration > Crawler Access > Remove URL.

Related reading:

1. WordPress to Blogger migration – retain Google PR and traffic

2. A Guide on Online Plagiarism: How to deal with spam blogs and duplicate content

Thanks for the valuable info… Now my question is: if I have some broken links in my sitemap, how can I remove them? I think it is a very basic question, but I am kind of a NOOB at website-related stuff. Hope you understand… :P

I have 7 crawl errors on my site. I am a total rookie as well. I think they are due to my changing the names of several drop-down titles on my home page. The crawler tries to crawl the old names. How do I fix that? Link to the new drop-down name? How?

Thank you for this well-written and easy-to-understand tutorial on how to remove those nasty crawl errors. It really helped me out a lot. Earned you a rare Google+ from me.

I have about 1000 crawl errors, and those pages don’t even exist anymore… How do I REMOVE them permanently from the Google index? I have read your explanation and it is too long… I just don’t understand… please help me :)